RSS + SNS Project

Sunday, October 26, 2014

[Success] Twitter + R + Mavericks + WordCloud

# installed the newest version of the twitteR package from github.

install.packages(c("devtools", "rjson", "bit64", "httr"))

#RESTART R session!

library(devtools)

install_github("geoffjentry/twitteR")

library(twitteR)

#

api_key <- "YOUR API KEY"

api_secret <- "YOUR API SECRET"

access_token <- "YOUR ACCESS TOKEN"

access_token_secret <- "YOUR ACCESS TOKEN SECRET"

setup_twitter_oauth(api_key,api_secret,access_token,access_token_secret)

#

searchTwitter("iphone")

Step 1: Load all the required packages

library(twitteR)

library(tm)

library(wordcloud)

library(RColorBrewer)

Step 2: Let's get some tweets in English containing the words "machine learning"

mach_tweets = searchTwitter("machine learning", n=500, lang="en")

Step 3: Extract the text from the tweets in a vector

mach_text = sapply(mach_tweets, function(x) x$getText())

Step 4: Construct the lexical Corpus and the Term Document Matrix

We use the function Corpus to create the corpus, and the function VectorSource to indicate that the text is in the character vector mach_text. To create the term-document matrix we apply several transformations, such as removing numbers and punctuation and converting to lower case.

# create a corpus

mach_corpus = Corpus(VectorSource(mach_text))

# create document term matrix applying some transformations

tdm = TermDocumentMatrix(mach_corpus,

control = list(removePunctuation = TRUE,

stopwords = c("machine", "learning", stopwords("english")),

removeNumbers = TRUE, tolower = TRUE))
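For comparison, here is a minimal Python sketch of what Step 4 does. It assumes a tiny hard-coded stop-word list and three made-up tweets in place of tm's stopwords("english") and real search results; the names STOPWORDS, tokenize and build_tdm are illustrative and do not come from any library.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption); tm's stopwords("english") is much longer.
STOPWORDS = {"machine", "learning", "is", "the", "a", "and", "for", "of", "to"}

def tokenize(text):
    """Lowercase, drop digits and punctuation, split on whitespace, remove stop words."""
    text = text.lower()
    text = re.sub(r"[0-9]+", "", text)
    text = re.sub(r"[^\w\s]", "", text)
    return [w for w in text.split() if w not in STOPWORDS]

def build_tdm(docs):
    """Return (terms, matrix) where matrix[i][j] counts terms[i] in docs[j]."""
    counts = [Counter(tokenize(d)) for d in docs]
    terms = sorted(set().union(*counts))
    matrix = [[c[t] for c in counts] for t in terms]
    return terms, matrix

# Made-up tweets standing in for the searchTwitter results.
docs = [
    "Machine learning is fun!",
    "Deep learning and machine learning for text, 2014.",
    "Text mining is fun.",
]
terms, matrix = build_tdm(docs)
for term, row in zip(terms, matrix):
    print(term, row)
```

Each row of the matrix is a term and each column is a document, which is the same orientation as the tdm object above.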

Step 5: Obtain words and their frequencies

# define tdm as matrix

m = as.matrix(tdm)

# get word counts in decreasing order

word_freqs = sort(rowSums(m), decreasing=TRUE)

# create a data frame with words and their frequencies

dm = data.frame(word=names(word_freqs), freq=word_freqs)
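The row-sum-and-sort step can be sketched in Python as follows. This assumes the term-document matrix is held as a plain dict mapping each term to its per-document counts, which is a made-up structure for illustration; word_frequencies is a hypothetical helper name.

```python
# Hypothetical term-document matrix: term -> counts in each document.
tdm = {
    "deep": [0, 1, 0],
    "fun": [1, 0, 1],
    "mining": [0, 0, 1],
    "text": [0, 1, 1],
}

def word_frequencies(tdm):
    """Sum each row, then sort by count (descending) and by term (ascending) on ties."""
    totals = {term: sum(row) for term, row in tdm.items()}
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))

word_freqs = word_frequencies(tdm)
for word, freq in word_freqs:
    print(f"{word}\t{freq}")
```

The list of (word, frequency) pairs plays the role of the dm data frame: it is what a word cloud routine needs as input.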

Step 6: Let's plot the wordcloud

# plot wordcloud

wordcloud(dm$word, dm$freq, random.order=FALSE, colors=brewer.pal(8, "Dark2"))

# save the image in png format

png("MachineLearningCloud.png", width=12, height=8, units="in", res=300)

wordcloud(dm$word, dm$freq, random.order=FALSE, colors=brewer.pal(8, "Dark2"))

dev.off()

Friday, October 24, 2014

Accessing Twitter

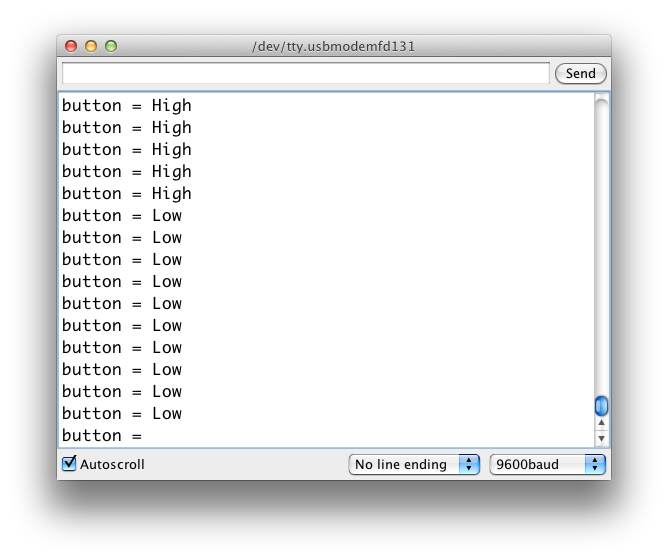

// Arduino ----------------------------------------------

// simpleTweet_00_a

/*

simpleTweet_00 arduino sketch (for use with

simpleTweet_00 processing sketch) by @msg_box june2011

This script is intended for use with a magnetic reed switch,

but any on/off switch plugged into the pin set by magReed_pin (pin 2 below) will readily work.

The Arduino is connected to a circuit with a sensor that

triggers the code: Serial.write(n); where n = 1 or 2.

The Processing sketch listens for that message and then

uses the twitter4j library to connect to Twitter

via OAuth and post a tweet.

To learn more about arduino, processing, twitter4j,

OAuth, and registering your app with Twitter...

visit <http://www.instructables.com/id/Simple-Tweet-Arduino-Processing-Twitter/>

visit <http://www.twitter.com/msg_box>

This code was made possible and improved upon with

help from people across the internet. Thank You.

Special shoutouts to the helpful lurkers at twitter4j,

arduino, processing, and bloggers everywhere, and

to the adafruit & ladyada crowdsource.

And above all, to my lovely wife, without

whom, none of this would have been possible.

Don't be a dick.

*/

const int magReed_pin = 2; // pin number

const int ledPin = 13;

int magReed_val = 0;

int currentDoorState = 1; // begin w/ circuit open

int previousDoorState = 1;

void setup(){

Serial.begin(9600);

pinMode(magReed_pin, INPUT);

pinMode(ledPin, OUTPUT);

}

void loop(){

watchTheDoor();

}

void watchTheDoor(){

magReed_val = digitalRead(magReed_pin);

if (magReed_val == LOW){ // open

currentDoorState = 1;

digitalWrite(ledPin, LOW);

}

if (magReed_val == HIGH){ // closed

currentDoorState = 2;

digitalWrite(ledPin, HIGH);

}

compareStates(currentDoorState);

}

void compareStates(int i){

if (previousDoorState != i){

previousDoorState = i;

Serial.write(i);

delay(1000); // wait 1 s so switch bounce does not send repeated messages

}

}
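The Arduino sketch above writes to the serial port only when the door state changes. The same change-detection idea can be simulated in Python, assuming a list of sampled pin readings (0 for LOW, 1 for HIGH) stands in for digitalRead; the function name door_events is hypothetical.

```python
def door_events(readings, initial_state=1):
    """Yield a state code (1 = open, 2 = closed) each time the state changes.

    `readings` is a sequence of 0 (LOW, open) or 1 (HIGH, closed) samples.
    """
    previous = initial_state
    for value in readings:
        current = 2 if value == 1 else 1
        if current != previous:
            previous = current
            yield current

# Made-up samples: open, open, closed, closed, closed, open, closed.
samples = [0, 0, 1, 1, 1, 0, 1]
events = list(door_events(samples))
print(events)
```

Repeated readings of the same level produce no output, which is what keeps the Processing side from receiving a message on every pass through loop().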

// Processing -------------------------------

import processing.serial.*;

import twitter4j.conf.*;

import twitter4j.internal.async.*;

import twitter4j.internal.org.json.*;

import twitter4j.internal.logging.*;

import twitter4j.http.*;

import twitter4j.api.*;

import twitter4j.util.*;

import twitter4j.internal.http.*;

import twitter4j.*;

static String OAuthConsumerKey = "YOUR CONSUMER KEY";

static String OAuthConsumerSecret = "YOUR CONSUMER SECRET";

static String AccessToken = "YOUR ACCESS TOKEN";

static String AccessTokenSecret = "YOUR ACCESS TOKEN SECRET";

Serial arduino;

Twitter twitter = new TwitterFactory().getInstance();

void setup() {

size(125, 125);

frameRate(10);

background(0);

println(Serial.list());

String arduinoPort = Serial.list()[4]; // Your Serial Port !!!

arduino = new Serial(this, arduinoPort, 9600);

loginTwitter();

}

void loginTwitter() {

twitter.setOAuthConsumer(OAuthConsumerKey, OAuthConsumerSecret);

AccessToken accessToken = loadAccessToken();

twitter.setOAuthAccessToken(accessToken);

}

private static AccessToken loadAccessToken() {

return new AccessToken(AccessToken, AccessTokenSecret);

}

void draw() {

background(0);

text("iLMS Tweet", 15, 45);

text("@bemoredev", 30, 70);

listenToArduino();

}

void listenToArduino() {

String msgOut = "";

int arduinoMsg = 0;

if (arduino.available() >= 1) {

arduinoMsg = arduino.read();

if (arduinoMsg == 1) {

msgOut = "LED1 On at "+hour()+":"+minute()+":"+second();

}

if (arduinoMsg == 2) {

msgOut = "LED1 Off at "+hour()+":"+minute()+":"+second();

}

compareMsg(msgOut); // this step is optional

// postMsg(msgOut);

}

}

void postMsg(String s) {

try {

Status status = twitter.updateStatus(s);

println("new tweet --:{ " + status.getText() + " }:--");

}

catch(TwitterException e) {

println("Status Error: " + e + "; statusCode: " + e.getStatusCode());

}

}

void compareMsg(String s) {

// compare new msg against latest tweet to avoid reTweets

java.util.List statuses = null;

String prevMsg = "";

String newMsg = s;

try {

statuses = twitter.getUserTimeline();

}

catch(TwitterException e) {

println("Timeline Error: " + e + "; statusCode: " + e.getStatusCode());

}

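// stop if the timeline request failed, the timeline is empty, or there is no message

if (statuses == null || statuses.isEmpty() || newMsg.length() == 0) {

return;

}

Status status = (Status)statuses.get(0);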

prevMsg = status.getText();

String[] p = splitTokens(prevMsg);

String[] n = splitTokens(newMsg);

//println("("+p[0]+") -> "+n[0]); // debug

if (p[0].equals(n[0]) == false) {

postMsg(newMsg);

}

//println(s); // debug

}
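compareMsg posts only when the first token of the new message differs from the first token of the latest tweet. Below is a Python sketch of that check, with the timeline passed in as a plain list of strings (newest first) instead of being fetched through twitter4j. The name should_post and the sample messages are illustrative assumptions.

```python
def should_post(new_msg, timeline):
    """Return True when new_msg should be tweeted.

    `timeline` is a list of earlier tweet texts, newest first.
    Only the first whitespace-separated token of each message is compared.
    """
    new_tokens = new_msg.split()
    if not new_tokens:
        return False  # nothing to post
    if not timeline:
        return True   # no earlier tweet to compare against
    prev_tokens = timeline[0].split()
    if not prev_tokens:
        return True
    return prev_tokens[0] != new_tokens[0]

print(should_post("Closed at 10:15:02", ["Opened at 10:14:40"]))
print(should_post("LED1 Off at 10:15:02", ["LED1 On at 10:14:40"]))
```

The first call returns True and the second returns False. Because the "LED1 On" and "LED1 Off" messages in the Processing sketch both begin with LED1, a first-token comparison treats them as duplicates; putting the state word first in the message would avoid that.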

Making a Twitter word cloud with R.

#install the necessary packages

install.packages("ROAuth")

install.packages("twitteR")

install.packages("wordcloud")

install.packages("tm")

library("ROAuth")

library("twitteR")

library("wordcloud")

library("tm")

#necessary step for Windows

download.file(url="http://curl.haxx.se/ca/cacert.pem", destfile="cacert.pem")

#to get your consumerKey and consumerSecret see the twitteR documentation for instructions

cred <- OAuthFactory$new(consumerKey='secret',

consumerSecret='secret',

requestURL='https://api.twitter.com/oauth/request_token',

accessURL='https://api.twitter.com/oauth/access_token',

authURL='https://api.twitter.com/oauth/authorize')

#necessary step for Windows

cred$handshake(cainfo="cacert.pem")

#save for later use for Windows

save(cred, file="twitter authentication.Rdata")

registerTwitterOAuth(cred)

#the cainfo parameter is necessary on Windows

r_stats<- searchTwitter("#Rstats", n=1500, cainfo="cacert.pem")

#save text

r_stats_text <- sapply(r_stats, function(x) x$getText())

#create corpus

r_stats_text_corpus <- Corpus(VectorSource(r_stats_text))

#clean up

r_stats_text_corpus <- tm_map(r_stats_text_corpus, content_transformer(tolower)) # tm 0.6+ needs content_transformer for base functions

r_stats_text_corpus <- tm_map(r_stats_text_corpus, removePunctuation)

r_stats_text_corpus <- tm_map(r_stats_text_corpus, function(x)removeWords(x,stopwords()))

wordcloud(r_stats_text_corpus)


One R Tip A Day

A word cloud (or tag cloud) can be a handy tool when you need to highlight the most commonly cited words in a text using a quick visualization. Of course, you can use one of the several online services, such as Wordle or Tagxedo, which are feature rich and have a nice GUI. Being an R enthusiast, I always wanted to produce this kind of image within R, and now, thanks to Ian Fellows' recently released wordcloud package, I finally can!

In order to test the package I retrieved the titles of the XKCD web comics included in my RXKCD package and produced a word cloud based on the titles' word frequencies, calculated using the powerful tm package for text mining (I know, it is like killing a fly with a bazooka!).

library(RXKCD)

library(tm)

library(wordcloud)

library(RColorBrewer)

path <- system.file("xkcd", package = "RXKCD")

datafiles <- list.files(path)

xkcd.df <- read.csv(file.path(path, datafiles))

xkcd.corpus <- Corpus(DataframeSource(data.frame(xkcd.df[, 3])))

xkcd.corpus <- tm_map(xkcd.corpus, removePunctuation)

xkcd.corpus <- tm_map(xkcd.corpus, content_transformer(tolower))

xkcd.corpus <- tm_map(xkcd.corpus, function(x) removeWords(x, stopwords("english")))

tdm <- TermDocumentMatrix(xkcd.corpus)

m <- as.matrix(tdm)

v <- sort(rowSums(m),decreasing=TRUE)

d <- data.frame(word = names(v),freq=v)

pal <- brewer.pal(9, "BuGn")

pal <- pal[-(1:2)]

png("wordcloud.png", width=1280,height=800)

wordcloud(d$word,d$freq, scale=c(8,.3),min.freq=2,max.words=100, random.order=T, rot.per=.15, colors=pal, vfont=c("sans serif","plain"))

dev.off()

As a second example, inspired by this post from the eKonometrics blog, I created a word cloud from the descriptions of the 3177 available R packages listed at http://cran.r-project.org/web/packages.

require(XML)

require(tm)

require(wordcloud)

require(RColorBrewer)

u = "http://cran.r-project.org/web/packages/available_packages_by_date.html"

t = readHTMLTable(u)[[1]]

ap.corpus <- Corpus(DataframeSource(data.frame(as.character(t[,3]))))

ap.corpus <- tm_map(ap.corpus, removePunctuation)

ap.corpus <- tm_map(ap.corpus, content_transformer(tolower))

ap.corpus <- tm_map(ap.corpus, function(x) removeWords(x, stopwords("english")))

ap.tdm <- TermDocumentMatrix(ap.corpus)

ap.m <- as.matrix(ap.tdm)

ap.v <- sort(rowSums(ap.m),decreasing=TRUE)

ap.d <- data.frame(word = names(ap.v),freq=ap.v)

table(ap.d$freq)

pal2 <- brewer.pal(8,"Dark2")

png("wordcloud_packages.png", width=1280,height=800)

wordcloud(ap.d$word,ap.d$freq, scale=c(8,.2),min.freq=3,

max.words=Inf, random.order=FALSE, rot.per=.15, colors=pal2)

dev.off()

As a third example, thanks to Jim's comment, I take advantage of Duncan Temple Lang's RNYTimes package to access user-generated content on the NY Times and produce a word cloud of today's comments on articles.

Caveat: in order to use the RNYTimes package you need an API key from The New York Times, which you can get by registering with The New York Times Developer Network (free of charge).

require(XML)

require(tm)

require(wordcloud)

require(RColorBrewer)

install.packages("RNYTimes", repos = "http://www.omegahat.org/R", type = "source")

require(RNYTimes)

my.key <- "your API key here"

what= paste("by-date", format(Sys.time(), "%Y-%m-%d"),sep="/")

# what="recent"

recent.news <- community(what=what, key=my.key)

pagetree <- htmlTreeParse(recent.news, error=function(...){}, useInternalNodes = TRUE)

x <- xpathSApply(pagetree, "//*/body", xmlValue)

# do some clean up with regular expressions

x <- unlist(strsplit(x, "\n"))

x <- gsub("\t","",x)

x <- sub("^[[:space:]]*(.*?)[[:space:]]*$", "\\1", x, perl=TRUE)

x <- x[!(x %in% c("", "|"))]

ap.corpus <- Corpus(DataframeSource(data.frame(as.character(x))))

ap.corpus <- tm_map(ap.corpus, removePunctuation)

ap.corpus <- tm_map(ap.corpus, content_transformer(tolower))

ap.corpus <- tm_map(ap.corpus, function(x) removeWords(x, stopwords("english")))

ap.tdm <- TermDocumentMatrix(ap.corpus)

ap.m <- as.matrix(ap.tdm)

ap.v <- sort(rowSums(ap.m),decreasing=TRUE)

ap.d <- data.frame(word = names(ap.v),freq=ap.v)

table(ap.d$freq)

pal2 <- brewer.pal(8,"Dark2")

png("wordcloud_NewYorkTimes_Community.png", width=1280,height=800)

wordcloud(ap.d$word,ap.d$freq, scale=c(8,.2),min.freq=2,

max.words=Inf, random.order=FALSE, rot.per=.15, colors=pal2)

dev.off()
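The regular-expression cleanup in the third example (split on newlines, drop tabs, trim surrounding whitespace, discard empty and "|" entries) could look like this in Python. The sample string is made up for illustration, and clean_lines is a hypothetical helper name.

```python
def clean_lines(raw):
    """Split text into lines, remove tabs, trim whitespace, drop empty and '|' lines."""
    lines = raw.split("\n")
    lines = [line.replace("\t", "").strip() for line in lines]
    return [line for line in lines if line not in ("", "|")]

# Made-up page text standing in for the parsed <body> content.
sample = "  First comment\t\n\n|\n\tSecond comment  \n"
cleaned = clean_lines(sample)
print(cleaned)
```

What remains is one string per comment line, ready to be turned into a corpus.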

iReader

5W1H

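Who: text and images from social media such as Twitter, Facebook, and RSS

When: is querying by date range possible?

Where: is querying by location possible?

What:

- Word-cloud typography analysis of a specific keyword on Twitter

- The same on Facebook

- For RSS feeds, let's run the examples from the machine learning book

How:

- First goal: analyze SNS text with R or Python

- Second goal: analyze SNS text with R or Python

Why:

- For big data and natural language processing through deep learning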
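As a possible starting point for the RSS goal, here is a minimal Python sketch that pulls item titles out of an RSS 2.0 document with the standard library and counts words. The feed text is an inline, made-up sample; fetching a real feed over the network is left out, and the function names are illustrative.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Made-up RSS 2.0 sample standing in for a downloaded feed.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <item><title>Word clouds in R</title></item>
    <item><title>Text mining in R and Python</title></item>
  </channel>
</rss>"""

def item_titles(feed_xml):
    """Return the <title> text of every <item> in an RSS 2.0 document."""
    root = ET.fromstring(feed_xml)
    return [item.findtext("title", default="") for item in root.iter("item")]

def title_word_counts(feed_xml):
    """Count lowercase, whitespace-separated words across all item titles."""
    words = []
    for title in item_titles(feed_xml):
        words.extend(title.lower().split())
    return Counter(words)

titles = item_titles(SAMPLE_FEED)
counts = title_word_counts(SAMPLE_FEED)
print(titles)
print(counts.most_common(3))
```

The resulting counts have the same shape as the word frequencies used for the Twitter word clouds, so the same plotting step could be reused.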
